Stay connected with BizTech Community—follow us on Instagram and Facebook for the latest news and reviews delivered straight to you.

The rapid rise of artificial intelligence has transformed the digital world, flooding social media, news feeds, and creative platforms with synthetic content that is increasingly hard to distinguish from human work. Graphite studies show that by the end of 2024, AI-generated content had overtaken human-generated content. By April 2025, more than 74% of sampled web pages contained AI-generated elements. This explosion, fuelled by tools such as ChatGPT, has ushered in a new age of doubt: how can people tell what is true in a world ruled by algorithms?

As we approach 2026, people are growing tired of AI material, and surveys show rising concern about authenticity. Blockchain and decentralised technologies offer a strong counter: proof-of-origin systems and verifiable credentials that prioritise human-made content. While authorities work on labelling rules, the real battle is to win back public trust. Can Web3 capabilities such as zero-knowledge proofs and on-chain authentication help stem misinformation and deepfakes?

This convergence of AI and blockchain is not mere speculation; it is a direct response to eroding trust. In September 2025, Pew Research found that 76% of Americans think it is crucial to be able to recognise AI content, yet only 47% feel confident they can. Adrian Ott, head of AI at EY Switzerland, compares AI content to “processed food”: novel at first, now ubiquitous and predictable, which leaves people craving “local, quality” alternatives with clear origins. In this setting, blockchain’s immutable ledgers and cryptographic proofs become ways to establish authenticity from the moment of creation, shifting the focus from detecting fakes to demonstrating what is real.

The Rise of AI Content Fatigue

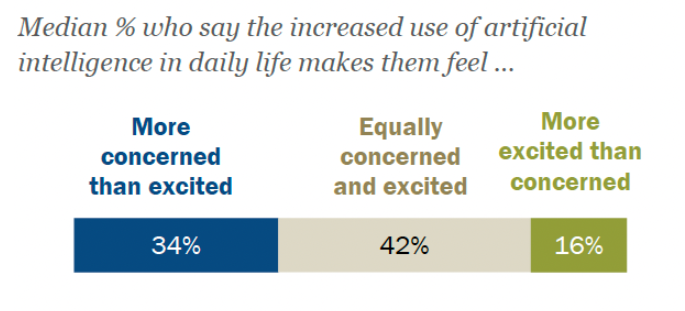

The novelty of AI generation has worn off. After years of enthusiasm over viral deepfakes, AI art auctions, and automated writing, users report fatigue. Pew’s global survey from spring 2025 found that a median of 34% of adults across the countries surveyed were more concerned than excited about AI’s rise, while 42% were equally concerned and excited. This “AI slop” (“slop” was Merriam-Webster’s Word of the Year for 2025) fills feeds with low-quality, repetitive content that drags down the overall experience.

“Like processed food taking over markets before a return to organic, people want to know the story behind content—the artist’s intent, the creator’s humanity,” says Ott. Platforms make this worse: algorithmic curation prioritises engagement, often favouring flashy AI output over more nuanced human effort. The result? Users lose interest and look for signals of authenticity.

In the crypto and Web3 world, this fatigue shows up as a slump in speculative NFT activity (NFT market capitalisation is down 66% from its January highs) as buyers put utility ahead of hype. But it also opens new doors: there is growing demand for verifiable human creation, from art with provable provenance to transparent journalism.

The Difficulty of Detecting Fake Media

AI content is hard to detect. Some deepfakes give themselves away through artefacts such as inconsistent lighting or mismatched audio, but advanced models like Grok’s image generator or OpenAI’s Sora produce near-flawless images and video. Pew’s data exposes the gap: people rate the ability to spot AI content as far more important than they feel confident in actually doing it.

Ott points to two risks: being too trusting of fake news, which leads to scams, or being too cynical and dismissing real events as “AI-generated” to fit a narrative. Manipulated media is not new; Photoshop long predates deepfakes. But AI makes fakes dramatically easier to produce at scale, which makes spreading false information cheap.

Regulators are responding with labelling requirements. The EU’s AI Act requires disclosure when media is AI-generated, while U.S. proposals are exploring watermarks. But labels are easy to evade: bad actors simply strip the metadata. Ott argues for the opposite approach: certify authentic content at the point of capture, proving its origin rather than relying on after-the-fact detection.

Blockchain’s Answer: Proof of Origin and Proof of Authenticity

Blockchain offers a proactive solution built on immutable records and cryptographic evidence. Swear, named Time’s 2025 Best Invention in Crypto/Blockchain, is a good example. Built by Jason Crawforth, the platform embeds “digital DNA” in media of all kinds, such as video, audio, and photographs, from the moment of creation, and links it to blockchain ledgers as proof that the media has not been altered.

“Any change can be seen by comparing it to the original,” Crawforth says. Swear is used in security hardware such as body cameras and drones, as well as in creator tools. Rather than asking “is this fake?”, it proves “this is real.” It focuses on mission-critical domains where integrity matters, such as journalism, evidence, and business.
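
The “compare it to the original” idea can be illustrated with a minimal Python sketch. It is not Swear’s actual method, whose “digital DNA” format is not described here; it assumes a plain SHA-256 digest as the fingerprint and a toy, in-memory hash-chained log (`ToyLedger`, a hypothetical stand-in for a real blockchain). The helpers `certify_at_capture` and `verify` are likewise illustrative names.

```python
# Hypothetical sketch of capture-time certification: NOT Swear's implementation.
# SHA-256 stands in for "digital DNA"; ToyLedger stands in for a blockchain.
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def fingerprint(media: bytes) -> str:
    """SHA-256 digest of the raw media bytes; any edit changes it."""
    return hashlib.sha256(media).hexdigest()


def _block_hash(prev: str, record: dict) -> str:
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


@dataclass
class ToyLedger:
    """Append-only, hash-chained log (illustrative stand-in for a blockchain)."""
    blocks: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        block_hash = _block_hash(prev, record)
        self.blocks.append({"prev": prev, "record": record, "hash": block_hash})
        return block_hash

    def chain_is_intact(self) -> bool:
        """Recompute every link; rewriting any past record breaks the chain."""
        prev = GENESIS
        for block in self.blocks:
            if block["prev"] != prev or block["hash"] != _block_hash(prev, block["record"]):
                return False
            prev = block["hash"]
        return True

    def find(self, digest: str) -> Optional[dict]:
        """Return the registered record for this digest, if any."""
        return next((b["record"] for b in self.blocks if b["record"]["sha256"] == digest), None)


def certify_at_capture(ledger: ToyLedger, media: bytes, device_id: str, timestamp: str) -> str:
    """Register the media's fingerprint at the moment of capture (hypothetical helper)."""
    return ledger.append({"sha256": fingerprint(media), "device": device_id, "ts": timestamp})


def verify(ledger: ToyLedger, media: bytes) -> bool:
    """True only if these exact bytes were registered and the ledger is untampered."""
    return ledger.chain_is_intact() and ledger.find(fingerprint(media)) is not None


if __name__ == "__main__":
    ledger = ToyLedger()
    clip = b"raw frames from a body camera"  # placeholder bytes
    certify_at_capture(ledger, clip, device_id="cam-042", timestamp="2025-11-03T10:15:00Z")
    print(verify(ledger, clip))            # True
    print(verify(ledger, clip + b"edit"))  # False: the digest no longer matches
```

Because the entry is written at capture, verification never has to guess whether content is fake: any edit changes the digest, and rewriting a past entry breaks the hash chain. A real deployment would also sign each entry with a device key, which this sketch omits.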

This proof-of-origin approach fits Vitalik Buterin’s idea of selective disclosure: zero-knowledge proofs (ZKPs) can verify specific attributes, such as a timestamp or capture device, without revealing everything else. Ethereum’s planned 2026 Glamsterdam and Heze-Bogota upgrades aim to strengthen this by scaling private transactions.
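
Production ZKP systems are too involved for a short example, so the sketch below swaps in a simpler selective-disclosure technique: per-attribute salted hash commitments, the idea behind formats such as SD-JWT. It is not a zero-knowledge proof. Disclosed values are revealed in full and only undisclosed attributes stay hidden, whereas a ZKP could prove a statement like “captured before a given date” without revealing the timestamp at all. The attribute names, the helper functions, and the single “anchor” digest (standing in for a signature or on-chain record) are all assumptions.

```python
# Selective disclosure via salted hash commitments (the idea behind SD-JWT).
# This is NOT a zero-knowledge proof; all names here are hypothetical.
import hashlib
import json
import secrets
from typing import Dict, List, Tuple

Disclosure = Tuple[str, str, str]  # (attribute name, value, salt)


def _commit(name: str, value: str, salt: str) -> str:
    """Salted hash of one attribute; the random salt stops brute-forcing short values."""
    return hashlib.sha256(json.dumps([salt, name, value]).encode()).hexdigest()


def _anchor(commitments: List[str]) -> str:
    """Single digest over all commitments, standing in for a signature or on-chain record."""
    return hashlib.sha256("".join(commitments).encode()).hexdigest()


def issue(attributes: Dict[str, str]) -> Tuple[Dict[str, str], List[str], str]:
    """At capture: commit to each attribute separately.

    Returns the salts (kept by the holder), the public commitments, and the anchor.
    """
    salts = {name: secrets.token_hex(16) for name in attributes}
    commitments = sorted(_commit(n, v, salts[n]) for n, v in attributes.items())
    return salts, commitments, _anchor(commitments)


def disclose(attributes: Dict[str, str], salts: Dict[str, str], names: List[str]) -> List[Disclosure]:
    """Holder reveals only the chosen attributes, each with its salt."""
    return [(n, attributes[n], salts[n]) for n in names]


def verify_disclosure(disclosed: List[Disclosure], commitments: List[str], anchor: str) -> bool:
    """Check the commitments match the anchor and every revealed attribute is among them."""
    if _anchor(commitments) != anchor:
        return False
    committed = set(commitments)
    return all(_commit(n, v, s) in committed for n, v, s in disclosed)


if __name__ == "__main__":
    attrs = {"timestamp": "2025-11-03T10:15:00Z", "device": "cam-042",
             "gps": "47.37,8.54", "operator": "badge-117"}
    salts, commitments, anchor = issue(attrs)
    shown = disclose(attrs, salts, ["timestamp", "device"])  # gps and operator stay hidden
    print(verify_disclosure(shown, commitments, anchor))  # True
    forged = [("timestamp", "2024-01-01T00:00:00Z", salts["timestamp"])]
    print(verify_disclosure(forged, commitments, anchor))  # False
```

The verifier learns the timestamp and device but sees only opaque digests for the GPS and operator fields. It does still learn how many attributes were committed, a leak that SD-JWT mitigates with decoy digests.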

Projects such as World ID (iris-based proof of uniqueness) and Concordium (ZK age verification) extend these ideas to identity, fighting bots while keeping users anonymous. In gaming, blockchain-verified assets guarantee scarcity. In social media, on-chain credentials help prevent impersonation.

Responses from Institutions and Platforms

Big Tech is adopting hybrid approaches: Google Wallet uses ZKPs to prove age and identity, and Google has open-sourced its ZKP libraries. Platforms are under pressure too: the problems with X’s Grok highlight how opaque these algorithms are, and Buterin’s pitch to use ZKPs for verifiably fair rankings is gaining attention.

Regulators are pushing for clarity, with mixed effects: Switzerland’s proposed surveillance changes triggered a backlash that led Proton to freeze investments there, while the UK’s age-verification rules favour ZKP tools. In the U.S., the GENIUS Act’s stablecoin audit requirements indirectly strengthen verifiable systems.

Ott puts the onus on platforms: if their filters fail to surface human content, users will leave. New tools such as browser extensions and on-chain labelling give users more options, but they need platform-level integration to work at scale.

2026: A Turning Point for Trust

As we move into 2026, AI material is everywhere (on more than 74% of web pages), so strong defences are essential. Blockchain’s edge is proactive authenticity through proofs of origin rather than reactive detection. Swear’s commercial growth, Ethereum’s privacy improvements, and the rise of ZKPs all signal progress.

But challenges remain: scaling verification to vast volumes of media, teaching users how to verify, and balancing privacy with compliance. Deepfakes are also hard to regulate because the same technology can be put to harmful or legitimate use.

Web3 has several opportunities: certifying artists, tokenising human work, and building trustless social platforms. As Ott suggests, “human-crafted” labels, modelled on “organic” labels in food, could turn blockchain-backed authenticity into a mark of value.

Conclusion

2026 marks a turning point for internet trust in the age of AI. Fatigue with AI content is driving demand for verifiable human-made material, and blockchain supplies it through proof-of-origin systems and ZKPs. Decentralised tools, from Swear’s authentication platform to Ethereum’s upcoming forks, resist surveillance and give people back control. Platforms must update their filters and adopt certification, or they will lose users. In a world where real and synthetic blur together, blockchain is more than a technology; it is a defender of truth, echoing Buterin’s strong advocacy of self-sovereignty. In 2026, digital authenticity could shift from detecting fakes to proving what is real.